Using AWS to solve your problems, case 376

I really don’t know why I didn’t think of it before.

I have been working on a project that involves going through a bunch of company pages on Crunchbase and trying to figure out which ‘industry’ or ‘category’ each company belongs to.

Crunchbase has some 200,000 companies, so accessing the data is not as simple as opening an Excel sheet. They do have an API, though it is not well documented and doesn’t always return well-formed JSON. Sadly, the post I linked to about the latter was written about a year ago, and nothing seems to have changed.

I ran into a bunch of hurdles, most of which came down to the fact that it took forever to get all this data. I tried a variety of things to speed it up (writing information to files for later processing, writing it to a SQLite database for later processing, etc.), but nothing seemed to stick.

I decided to revamp my approach over the last couple of days to leverage StarCluster and S3. StarCluster is essentially an easy way to spin up an EC2 cluster pre-configured with applications that facilitate distributed computing. I wasn’t doing heavy distributed computing per se, but I did need to open a whole bunch of connections to Crunchbase, so having multiple instances helped a lot. I also wanted a place to store the JSON docs I was retrieving without having to worry too much about file I/O or network bandwidth, and I wanted those documents to be persistent rather than wiped out when I terminated my EC2 instances. S3 made this a natural choice.

The nice thing about this combination is how much less time I spent troubleshooting and writing exception handling. Here are some good examples:

1. To check if I had already retrieved a document before, I had to write code like:

```python
import os

def fetch_company_file(cb_conn, company):
    # COMPANY_EI, API_KEY and OUTPUTDIR are module-level settings
    company_file = ''.join((OUTPUTDIR, '/', company['permalink'], '.json'))

    # skip companies whose file already exists and is non-empty
    if os.path.exists(company_file) and os.path.getsize(company_file) > 0:
        return

    query = ''.join((COMPANY_EI, company['permalink'], '.js?api_key=', API_KEY))
    cb_conn.request("GET", query)
    data = cb_conn.getresponse().read()

    with open(company_file, 'wb') as f:
        f.write(data)
```

But now it’s more like:

```python
def fetch_company_to_s3(cb_conn, s3bucket, company, ep, apikey):
    # keys on my S3 bucket are of the form crunchbase/data/company-name.json
    keyname = ''.join(('crunchbase', '/data/', company['permalink'], '.json'))

    # unambiguous check for whether the file already exists, and if so skip it
    if keyname in [obj.name for obj in s3bucket.list(prefix=keyname)]:
        return

    # the next 3 lines are the same as before
    query = ''.join((ep, company['permalink'], '.js?api_key=', apikey))
    cb_conn.request("GET", query)
    data = cb_conn.getresponse().read()

    # just pass in a new key name and dump the variable data into it
    key = s3bucket.new_key(keyname)
    key.set_contents_from_string(data)
```
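Because boto talks to the real S3 service, the skip logic above is awkward to try out offline. Here is a minimal sketch of the same check-then-fetch pattern, assuming a plain Python dict stands in for the bucket and a hypothetical `fetch_json` callable stands in for the Crunchbase request:

```python
# Minimal sketch: a plain dict stands in for the S3 bucket (key name -> JSON text),
# and fetch_json is a hypothetical callable that would hit the Crunchbase API.

def company_key(permalink):
    """Build a key of the form crunchbase/data/<permalink>.json."""
    return ''.join(('crunchbase', '/data/', permalink, '.json'))


def fetch_if_missing(store, company, fetch_json):
    """Fetch and store one company's JSON unless it is already stored.

    Returns True if a fetch happened, False if the key was skipped.
    """
    keyname = company_key(company['permalink'])
    if keyname in store:
        # Already retrieved on an earlier run: skip the network call entirely.
        return False
    store[keyname] = fetch_json(company['permalink'])
    return True
```

Running the same loop twice only fetches each company once, which is what makes restarts cheap.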

2. It also helps me recover pretty easily from a broken connection or something like that. I can now just skip through files left and right instead of figuring out where I’d dropped my connection and what would be the best place to restart. I had originally tried a couple of ways around it, e.g. using a counter to keep track of how many elements of the list of companies I had gone through, but I realized that just splitting a couple of hundred thousand items over 16 computers was much better than any other kind of handling.
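Splitting the list over 16 machines can be as simple as giving each node a contiguous slice. A minimal sketch, assuming every node holds the same company list and knows its own `worker_id`; `shard` is a hypothetical helper, not part of StarCluster:

```python
# Minimal sketch, assuming the company list is identical on every node and each
# node knows its own worker_id (0..n_workers-1). Names here are hypothetical.

def shard(items, worker_id, n_workers=16):
    """Return the contiguous slice of items assigned to one worker.

    The first len(items) % n_workers workers get one extra item, so shard
    sizes differ by at most one and every item lands in exactly one shard.
    """
    if not 0 <= worker_id < n_workers:
        raise ValueError("worker_id must be in range(n_workers)")
    base, extra = divmod(len(items), n_workers)
    start = worker_id * base + min(worker_id, extra)
    end = start + base + (1 if worker_id < extra else 0)
    return items[start:end]
```

Since already-stored keys are skipped, a node that drops its connection can simply rerun its whole shard from the top instead of tracking a counter.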

3. It frees up my computer! No more overheating computers! I can sit back and smoke a hookah for all I care.

Which is pretty much what I’m doing right now.

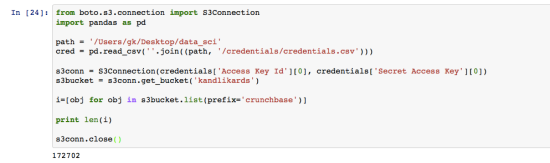

Except not really. I’m just sitting here watching my EC2 cluster do its work. I already have 172K records. In my first attempt, without this kind of parallel computing at my disposal, I hadn’t managed to get even 20K records!

I should be done in several minutes here. If I haven’t already passed out on the couch waiting for the counter to tick closer to 220K, I will try to post some summary statistics on the files. But don’t count on it.

Some of the things about parallel computing are interesting. By which I mean the things in Python are interesting.

There’s this notion of sync/async execution, which takes a lot of getting used to. Then there are functions that let you ‘scatter’ an iterable in a particular layout (chunks or round-robin, I think). You can also ‘push’ a variable to, or ‘pull’ one from, the remote clients, and you can even move functions around this way. This last bit was a great find because it saved me from having to write separate sets of code.
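The sync/async distinction is easiest to see in a small example. This sketch uses the standard library’s `concurrent.futures` rather than IPython’s parallel tools, so it is only an analogy: `work` is a hypothetical stand-in task, `pool.map` blocks until every result is in, and `submit` returns `Future` objects immediately so the caller decides when to wait.

```python
# Sketch using the standard library, not IPython's parallel API: it only
# illustrates blocking (sync) versus non-blocking (async) execution.
from concurrent.futures import ThreadPoolExecutor


def work(n):
    """Hypothetical stand-in for a per-company task; here it squares a number."""
    return n * n


def run_sync(values, workers=4):
    """Blocking: returns only once every result is ready (order preserved)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(work, values))


def start_async(pool, values):
    """Non-blocking: submit() hands back Future objects right away."""
    return [pool.submit(work, v) for v in values]


def gather(futures):
    """Block only at the point where the results are actually needed."""
    return [f.result() for f in futures]
```

With the async version, the caller can keep doing other work between `start_async` and `gather`.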

The biggest thing I wanted out of this was to have the whole app contained within a single cell of an IPython notebook (ipynb). I don’t know if that’s necessarily a good thing, but I think it is easier to read, and you don’t have to explain to others what you’re trying to do because your code is all there.

Anyway, if you want to do cool things, don’t always try to solve them the conventional way; people have spent a lot of time trying to make things easier for you. Start using those things!

-Gautam